Intro to AI Module: Understanding Generative AI

In recent years, Generative AI has emerged as one of the most transformative forces in technology, reshaping how we create, interact, and innovate. From producing human-like text to generating realistic images, videos, and even music, generative models are redefining what machines can do. But what exactly is generative AI, and why is it such a big deal in 2025?

This guide breaks down the fundamentals, evolution, and practical use cases of Generative AI—with examples and expert insights to help you build a solid understanding.

🔍 What Is Generative AI?

Generative AI refers to a class of machine learning models that can generate new content—such as text, images, code, audio, and video—based on learned patterns from massive datasets.

Unlike traditional AI systems that apply fixed rules or predict a label or a number, generative models produce new content. Think of tools like ChatGPT (OpenAI), Gemini (Google, formerly Bard), and Stable Diffusion (Stability AI): between them they can write articles, produce artwork, and simulate conversations.

At its core, generative AI uses deep learning, especially Transformer-based models, to learn statistical patterns in data and produce human-like outputs. This makes it a powerful tool for content generation across industries.

🧠 How Does Generative AI Work?

Generative AI relies on a few key techniques:

- Natural Language Processing (NLP): The field behind models like ChatGPT, concerned with understanding and generating human-like language.

- Transformer Architectures: The foundation of LLMs (Large Language Models) like GPT-4 and Google PaLM, enabling models to process and generate contextually rich content.

- Training on Massive Datasets: Generative models are trained on large, diverse collections of text, images, and code, learning patterns, syntax, and semantics.
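To make the Transformer bullet a little more concrete, here is a minimal sketch of scaled dot-product attention, the core operation described in the "Attention Is All You Need" paper. It uses only the Python standard library, and the three 2-dimensional token vectors are made up for illustration. Real models add learned projection matrices, multiple heads, and many stacked layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

# Three made-up token vectors, used as queries, keys, and values (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = attention(tokens, tokens, tokens)
print(contextual)
```

Each output vector is a blend of all the input vectors, weighted by how strongly the query matches each key. That blending is how a token's representation picks up context from the tokens around it.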

Examples:

- GPT (Generative Pre-trained Transformer) by OpenAI can write essays, emails, or code.

- DALL·E 3 can create high-quality images from text prompts.

- Google DeepMind’s Gemini offers advanced multimodal capabilities, combining vision, language, and reasoning.

🛠️ Popular AI Tools for Creators in 2025

With the explosion of generative AI, creators now have access to powerful tools:

- ChatGPT (OpenAI): Writing, research, brainstorming.

- Midjourney / DALL·E: AI-generated art and design.

- RunwayML: Video generation and editing.

- Notion AI / Jasper: Content writing and marketing automation.

- GitHub Copilot: AI-assisted coding.

These tools empower writers, designers, marketers, developers, and educators to automate tasks, boost creativity, and deliver at scale.

🚀 From NLP to LLMs: A Quick Evolution

The journey of generative AI began with simple N-Gram models and Recurrent Neural Networks (RNNs). However, breakthroughs in Transformer models (first introduced in the 2017 “Attention Is All You Need” paper) paved the way for today’s LLMs.
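For a sense of how simple those early approaches were, here is a minimal sketch of a bigram (2-gram) text generator. The tiny corpus and the function names are made up for illustration. The model only remembers which word followed which, then samples from those observations.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record, for each word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Sample up to `length` words by repeatedly picking an observed next word."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < length:
        options = followers.get(output[-1])
        if not options:  # dead end: this word never had a follower
            break
        output.append(rng.choice(options))
    return " ".join(output)

corpus = "the cat sat on the mat and the cat ran to the door"
model = train_bigrams(corpus)
print(generate(model, "the", 8))
```

Because the model looks back only one word, it cannot keep track of longer context. That limitation is what RNNs, and later Transformers, were designed to overcome.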

Notable advancements:

- GPT-2 and GPT-3 revolutionized text generation.

- GPT-4 improved reasoning and multilingual performance, and accepted image inputs alongside text.

- RAG (Retrieval-Augmented Generation) techniques now pair LLMs with documents retrieved at query time, so answers can be grounded in external, up-to-date sources.

- Companies like NVIDIA, Anthropic, and Meta AI are building foundation models with billions of parameters, fueling the AI race.

⚙️ Training Generative AI: Supervised vs Semi-Supervised

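Training LLMs is computationally intensive and data-heavy. Pre-training itself is mostly self-supervised: the model learns to predict the next token, so the text supplies its own labels. When labeled data comes into play, two paradigms are commonly discussed: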

- Supervised Learning: Trained with labeled datasets (input-output pairs).

- Semi-Supervised Learning: Uses a smaller set of labeled data and a larger set of unlabeled data, offering efficiency and scalability.
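The difference between the two can be sketched with pseudo-labeling, one common semi-supervised technique. This is a toy illustration, not how LLMs are trained: the 1-D data, the labels, and the nearest-centroid classifier are all made up to keep the example short.

```python
def centroids(points, labels):
    """Average the points of each class to get one centroid per label."""
    sums, counts = {}, {}
    for x, y in zip(points, labels):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(model, x):
    """Return the label whose centroid is closest to x."""
    return min(model, key=lambda y: abs(model[y] - x))

# Supervised step: fit on a small labeled set (input-output pairs).
labeled_x = [1.0, 2.0, 8.0, 9.0]
labeled_y = ["low", "low", "high", "high"]
model = centroids(labeled_x, labeled_y)

# Semi-supervised step: label the larger unlabeled pool with the current
# model (pseudo-labels), then refit on labeled plus pseudo-labeled data.
unlabeled_x = [0.5, 3.0, 7.0, 10.0]
pseudo_y = [predict(model, x) for x in unlabeled_x]
model = centroids(labeled_x + unlabeled_x, labeled_y + pseudo_y)

print(pseudo_y, model)
```

In practice, pseudo-labeling usually keeps only the predictions the model is confident about, since wrong pseudo-labels can reinforce early mistakes.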

Organizations like OpenAI and Google also use reinforcement learning from human feedback (RLHF) to better align model behavior with human preferences and safety guidelines.

🧩 Prompt Engineering, Fine-Tuning & RAG

Three techniques to optimize AI output:

- Prompt Engineering: Designing effective prompts for best responses.

- Fine-Tuning: Adapting a pre-trained model to a specific use-case or dataset.

- RAG (Retrieval-Augmented Generation): Retrieves relevant documents at query time and passes them to the model as context, so answers can draw on current, source-backed information.

These methods make AI more accurate, relevant, and domain-specific.
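Prompt engineering and RAG can be sketched together in a few lines. The example below is a minimal illustration under stated assumptions: the knowledge base is hypothetical, retrieval is a simple word-overlap ranking (production systems typically use embedding-based search), and the final step of sending the prompt to a model is left out.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda d: len(q_words & tokenize(d)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Assemble a grounded prompt: instructions, retrieved context, question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

# Hypothetical knowledge base; a real system would query a document store.
knowledge_base = [
    "The support desk is open from 9am to 5pm on weekdays.",
    "Refunds are processed within 14 days of a return.",
    "Shipping to Europe takes 5 to 7 business days.",
]
prompt = build_prompt("How long do refunds take?", knowledge_base)
print(prompt)
```

The instruction line is the prompt engineering part, and the retrieved context is the RAG part. Fine-tuning, by contrast, changes the model's weights rather than its input, so it does not appear in this sketch.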

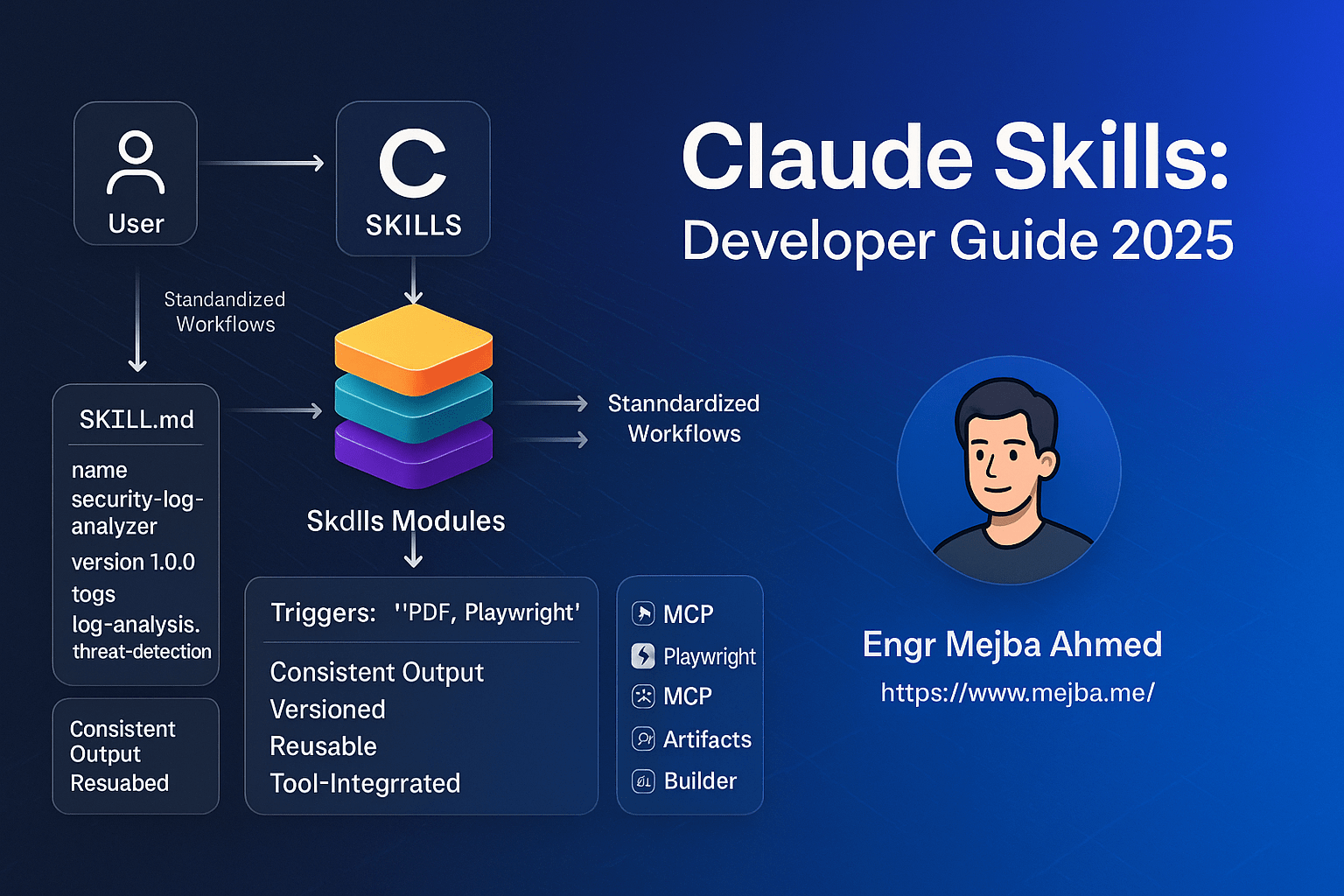

🧱 Foundation Models: The New AI Infrastructure

Foundation models are large, pre-trained AI models that can be adapted for a wide range of tasks—writing, translation, image creation, and more.

Examples:

- GPT-4 (OpenAI)

- Claude (Anthropic)

- Gemini (Google DeepMind)

They serve as the base layer, which can either be used off-the-shelf or fine-tuned for specific business needs.

🏗️ Buy vs Make: Should You Build Your Own AI Model?

Companies face a key choice:

- Buy (use existing foundation models): Faster, cost-effective, scalable.

- Make (train your own private models): Greater control, data privacy, industry-specific optimization.

In most cases, startups and creators opt for APIs from providers like OpenAI, Hugging Face, or Cohere—balancing performance and cost.

🌐 Use Cases Across Industries

Generative AI is transforming sectors:

- Healthcare: Clinical documentation, patient support, drug discovery.

- Finance: Fraud detection, report generation, chatbot assistants.

- Marketing: Content generation, A/B testing, personalization.

- Education: AI tutors, content summarization, adaptive learning.

- Entertainment: Scriptwriting, virtual actors, immersive game design.

🛡️ Challenges: Ethics, Bias & Security

While generative AI offers significant potential, it also raises concerns:

- Bias in training data can lead to discriminatory outputs.

- Misinformation risks from deepfakes and hallucinated text.

- Security & misuse—AI-generated malware or spam.

Industry leaders are actively working on AI alignment, transparent model reporting, and ethical frameworks to address these issues.

✅ Conclusion: The Future of AI Is Generative

As we step further into 2025, Generative AI is no longer a futuristic concept—it’s a core part of how we work, create, and solve problems. Whether you’re a developer, creator, student, or business leader, now is the time to understand, experiment with, and responsibly harness the power of generative AI.

Stay curious. Stay ethical. And start building with AI.