Claude Code Agent Swarm: How Autonomous Task Orchestration Changes Everything

I've been running Claude Code since its early days. Back then, working with it felt like managing an enthusiastic junior developer — capable but requiring constant oversight. You'd delegate a task, watch it work, then step in to redirect when context got lost or the session crashed. The workflow was powerful but fragile.

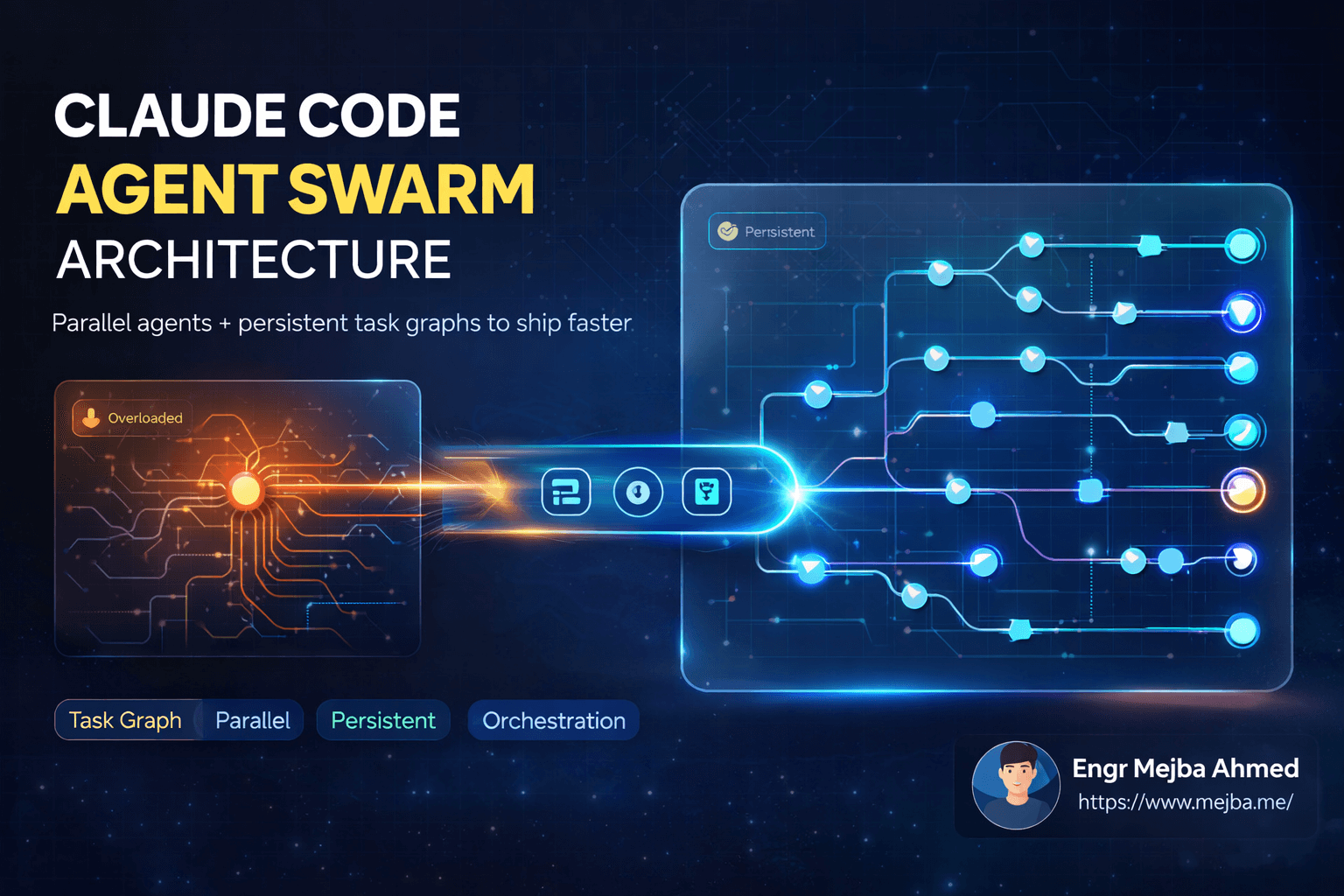

That changed fundamentally with the agent swarm update. What Anthropic shipped isn't just an incremental improvement. It's a complete architectural reimagining of how AI handles complex, multi-step work. Instead of one agent struggling to hold everything in memory, Claude now coordinates multiple specialized sub-agents working in parallel, each with its own context window, managed through persistent task graphs that survive session restarts.

After spending weeks pushing this system through real development scenarios, I can say this: Claude Code has evolved from a tool into something closer to a project manager. Let me show you what that actually means in practice.

The Old Way vs. The Swarm Architecture

Understanding what changed requires knowing what came before.

Previous Claude Code versions operated as a single agent with a single context window. Every task, every file read, every code generation consumed tokens from the same 200K pool. Complex projects would eat through context quickly, forcing constant compactions — those moments where Claude summarizes everything to free up memory, often losing crucial details in the process.

You'd also face the re-anchoring problem. Clear your session or restart, and Claude lost all context about what it was doing. Starting fresh meant re-explaining the entire project state, which files mattered, what had been completed, what remained.

The agent swarm architecture eliminates both problems.

Now, when Claude faces a complex task, it spawns multiple sub-agents. Each operates with its own isolated 200K token context window. The parent agent coordinates work distribution while sub-agents execute independently. Context isolation means one agent exploring your codebase doesn't consume tokens from another agent writing implementation code.

But the real breakthrough is the task graph system. Instead of keeping task state in volatile memory, Claude now writes dependencies to JSON files stored in session-specific folders. Each task has a unique ID, a status, and two critical fields: "blocks" (tasks waiting on this one to complete) and "blocked by" (tasks that must finish first). This dependency graph lives on disk, independent of any agent's memory.

The implications are significant. You can clear your session, restart Claude, even come back the next day — and the system knows exactly where you left off. No re-anchoring. No lost progress. The task graph is the source of truth.

How Parallel Execution Transforms Throughput

Let me illustrate with a concrete example.

Imagine you need Claude to implement eight independent API endpoints. In the old model, Claude would approach this sequentially: implement endpoint one, test it, move to endpoint two, and so on. Eight endpoints, eight sequential steps, each consuming from the same context window.

With the agent swarm, Claude recognizes these tasks have no dependencies on each other. They can run in parallel. It spawns multiple sub-agents, each taking one or more endpoints, and they work simultaneously. What took eight sequential cycles now completes in one parallel batch.

The math becomes dramatic at scale. A project with 16 independent tasks? Old Claude: 16 sequential steps minimum. Swarm Claude: potentially one cycle if resources allow, or at least massively reduced through parallel batching.

I watched this happen on a real refactoring project. Claude broke down the work into 12 sub-tasks, identified dependencies (only three tasks actually blocked others), and dispatched the remaining nine tasks to parallel agents. The entire refactor completed in a fraction of the time the old approach would have required.

The task graph made it possible. By explicitly modeling which tasks block which others, Claude can make intelligent parallelization decisions rather than defaulting to sequential safety.

Model Specialization: Right Tool for Right Job

The swarm doesn't just parallelize — it specializes.

Claude Code now assigns different AI models based on task complexity. Opus 4.5 handles heavy reasoning work: architectural decisions, complex algorithm implementation, nuanced refactoring. But when tasks involve simpler exploration — reading files, searching codebases, gathering context — Claude dispatches Haiku or Sonnet models instead.

This isn't just about speed. It's economic efficiency. Opus tokens cost more than Haiku tokens. Running a full Opus model to grep through files is wasteful when a lighter model handles it perfectly well.

In practice, you'll see the main orchestrating agent running on Opus while exploration agents use Haiku. The main agent receives digested information back, makes decisions, then dispatches more specialized work appropriately. It's like having a senior architect who delegates research to junior developers but handles the critical design decisions personally.

The result: faster execution, lower costs, and no degradation in output quality. The system matches computational power to actual task requirements.

Persistence Changes Everything About Long Projects

Here's where the architecture really shines for real-world development.

I work on projects that span days or weeks. Previously, every new Claude session meant re-establishing context. Even with project files and CLAUDE.md, there was overhead in bringing the AI up to speed on what had been done and what remained.

The persistent task graph eliminates this entirely.

Tasks are stored as JSON in session folders, identified by session ID or custom names you can assign. When you return to Claude, it reads the task graph from disk, understands the current state instantly, and resumes work without missing a beat.

I tested this deliberately. Started a complex feature implementation, completed roughly 40% of the sub-tasks, then completely cleared my session. Came back the next day, asked Claude to continue the implementation. It loaded the task graph, identified the remaining work, and picked up exactly where it left off. No re-explanation needed.

This persistence also provides something valuable: an audit trail. The task files show what was attempted, what succeeded, what remains. If something goes wrong, you can examine the task graph to understand the execution flow. It's debugging for AI-assisted development.

Co-work: The Swarm for Non-Developers

Anthropic recognized that this coordination pattern isn't just for code.

They released Co-work, which applies the same agent swarm architecture to general computer tasks. Non-developers can now delegate complex work — research projects, document organization, presentation creation — and watch multiple agents execute in parallel with the same persistent task management.

The key difference: Co-work adds safety guardrails. It operates in a more controlled environment with explicit permissions for file system access. This makes sense for less technical users who might not fully understand what "unrestricted file system access" implies.

I've used Co-work for market research tasks. Describe the research question, and it spawns agents to gather information from different sources, synthesize findings, and produce formatted reports. The task graph shows research subtopics being explored in parallel, then merging into final deliverables.

For teams with mixed technical capabilities, having both Claude Code (full power, developer-focused) and Co-work (guardrailed, general-purpose) means everyone can leverage the swarm architecture appropriately.

Implementing Task Breakdown in Your Workflow

Sometimes Claude doesn't automatically break down tasks. When facing a complex request, you might need to explicitly prompt for task graph creation.

The approach I've found effective: describe the end goal, then ask Claude to create a dependency graph before executing anything. Something like:

"I need to implement user authentication with OAuth, session management, and protected routes. Before starting, break this into sub-tasks with explicit dependencies. Show me which tasks can run in parallel versus which must be sequential."

Claude will generate a structured breakdown, often revealing parallelization opportunities you hadn't considered. Review it, adjust if needed, then give the green light for execution.

You can also examine task graphs directly. The JSON files are human-readable. Each task shows its ID, description, status, and dependency relationships. If you want to modify execution order or adjust priorities, you can edit these files between sessions.

This gives you a level of control that didn't exist before. The AI proposes execution strategy, you review and approve, then watch parallel execution unfold according to the plan you've validated.

Practical Tips from Production Use

After extensive use, several patterns have emerged for getting the most from the swarm architecture.

Start broad, let Claude decompose. Rather than micromanaging individual tasks, describe the overall objective and let the task breakdown happen organically. Claude often identifies sub-tasks and parallelization opportunities that humans miss.

Use custom session names for projects. Instead of relying on auto-generated session IDs, name your sessions meaningfully. This makes returning to specific projects easier and keeps task graphs organized.

Review dependency graphs for complex work. Before letting agents execute, examine the proposed task structure. Occasionally Claude misses dependencies or could parallelize more aggressively. A quick review catches these before execution.

Trust but verify parallel execution. When multiple agents work simultaneously, merge conflicts or consistency issues can arise. Have Claude run validation steps after parallel batches complete.

Leverage model specialization consciously. If you know certain tasks are exploration-heavy, explicitly note this. Claude will route to lighter models more aggressively when it understands the task nature.

Don't fear session restarts. The persistent task graph means you can restart freely without losing progress. If something goes wrong or context seems confused, clearing the session and letting Claude reload from the task graph often resolves issues cleanly.

The Bigger Picture: AI as Project Manager

What strikes me most about this update isn't any individual feature — it's the fundamental shift in interaction model.

Previous AI assistants, even capable ones, required constant human orchestration. You were the project manager, breaking down work, sequencing tasks, maintaining state in your own head, and directing the AI step by step.

The agent swarm inverts this. You become the stakeholder describing outcomes, and Claude becomes the project manager figuring out how to achieve them. It handles decomposition, dependency management, resource allocation (model selection), parallel coordination, and progress tracking.

This changes what's practical to delegate. Tasks that previously required too much oversight to be worth delegating now become candidates for AI execution. The coordination overhead that made AI assistance net-negative for some workflows disappears.

I've found myself approaching projects differently. Instead of thinking "what can I have Claude help with?" I now think "what's the final outcome I need?" and let the system figure out the path. Sometimes that path involves parallel agents I wouldn't have thought to deploy. Sometimes it involves task decomposition more granular than I'd have created.

The AI isn't just executing tasks — it's managing the execution itself.

What This Means for AI Development Going Forward

The agent swarm pattern Claude pioneered will likely become standard. Once you've experienced parallel execution with persistent state, single-agent sequential processing feels primitive.

I expect to see competing AI systems adopt similar architectures. The advantages are too significant to ignore: better context management, natural parallelization, resilience through persistence, and more efficient resource utilization through model specialization.

For developers, this means expanding what we consider "AI-automatable." Tasks requiring multi-step coordination, previously too complex for reliable AI assistance, become practical. The ceiling on AI capability rises not through smarter models but through smarter orchestration of existing models.

For businesses, this suggests reconsidering AI's role in workflows. The agent swarm can handle project-level complexity, not just task-level assistance. Delegating entire initiatives becomes feasible in ways that weren't before.

We're watching AI assistants evolve from tools you use into systems you manage. The swarm is an early indicator of that trajectory, and from what I've seen, the trajectory is accelerating.

Getting Started Today

If you're already using Claude Code, the agent swarm capabilities are available now. Start with a moderately complex task and explicitly request task breakdown. Watch how Claude decomposes work, assigns dependencies, and coordinates execution.

If you're new to Claude Code, this is an excellent time to start. The learning curve is gentler when the system handles coordination overhead. You can focus on describing objectives rather than managing execution details.

For non-developers interested in AI automation, explore Co-work. The guardrailed environment makes experimentation safer while delivering the same parallel execution benefits.

The agent swarm represents where AI assistance is heading: autonomous, persistent, intelligently coordinated. The teams and individuals who learn to work with these systems now will have significant advantages as the technology matures.

Because this isn't about AI replacing human work. It's about AI handling the coordination complexity that previously limited what any individual could accomplish. The swarm multiplies your leverage. The question is just what you'll do with it.

Let's Work Together

Looking to build AI systems, automate workflows, or scale your tech infrastructure? I'd love to help.

- Fiverr (custom builds & integrations): fiverr.com/s/EgxYmWD

- Portfolio: mejba.me

- Ramlit Limited (enterprise solutions): ramlit.com

- ColorPark (design & branding): colorpark.io

- xCyberSecurity (security services): xcybersecurity.io